Fifteen million people have come online through Free Basics, Facebook's zero-rated walled garden, in the past year. "If we accept that everyone deserves access to the internet, then we must surely support free basic internet services. Who could possibly be against this?" asks Facebook founder Mark Zuckerberg in a recent op-ed defending Free Basics.

This rhetorical question, however, has elicited a plethora of answers. The network neutrality debate has accelerated over the past few weeks, with the Telecom Regulatory Authority of India (TRAI) releasing a consultation paper on differential pricing.

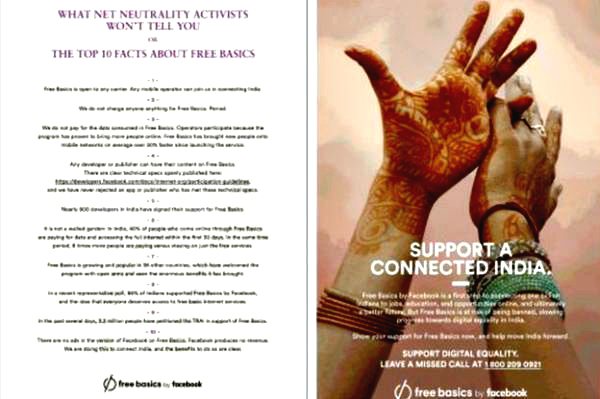

While notifications urging you to "Save Free Basics in India" pop up on Facebook, an enormous backlash against this zero-rated service has erupted in India.

The policy objectives that must guide regulating net neutrality are consumer choice, competition, access and openness. Facebook claims that Free Basics is a transition to the full internet and digital equality. However, by acting as a gatekeeper, Facebook gives itself the distinct advantage of deciding what services people can access for free by virtue of them being "basic", thereby violating net neutrality.

Amidst this debate, it's important to think about the power Facebook has to manipulate public discourse. In the past, Facebook has used its powerful News Feed algorithm to significantly shape our consumption of information online.

In July 2014, Facebook researchers revealed that for a week in January 2012, the company had altered the News Feeds of 689,003 randomly selected Facebook users to control how many positive and negative posts they saw. This was done without their consent, as part of a study to test how social media could be used to spread emotions online.

Their research showed that emotions were in fact easily manipulated. Users tended to write posts that were aligned with the mood of their timeline.

Another worrying indication of Facebook's ability to alter discourse came during the ALS Ice Bucket Challenge in July and August 2014. Users' News Feeds were flooded with videos of individuals pouring a bucket of ice over their heads to raise awareness for a charitable cause, but the videos did not spread entirely on merit.

The challenge was Facebook's method of boosting its native video feature, which was launched at around the same time. Meanwhile, its News Feed was mostly devoid of news about the riots in Ferguson, Missouri, which were a trending topic on Twitter.

Each day, the News Feed algorithm has to choose roughly 300 posts out of a possible 1,500 for each user, which involves much more than a random selection. The posts you view when you log into Facebook are carefully curated, with thousands of factors kept in mind. Each like and comment is a signal to the algorithm about your preferences and interests.

The amount of time you spend on each post is logged and then used to determine which posts you are most likely to stop and read. Facebook even takes into account text that is typed but not posted, and makes algorithmic decisions based on it.

It also differentiates between likes: if you like a post before reading it, the News Feed automatically assumes that your interest is much fainter than if you like it after spending 10 minutes reading it.
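To make the mechanics concrete, here is a minimal sketch in Python of how signals like these could be combined to pick 300 posts out of 1,500. It is purely illustrative and is not Facebook's actual algorithm: the names `Interaction`, `Post`, `build_affinity` and `select_feed`, the per-author scoring and every weight are assumptions made up for this example.

```python
from collections import defaultdict
from dataclasses import dataclass

MAX_SECONDS = 600.0  # hypothetical cap: ten minutes of reading


@dataclass
class Interaction:
    """One past encounter between the user and a post (hypothetical model)."""
    author: str
    liked: bool = False
    commented: bool = False
    dwell_seconds: float = 0.0        # time spent looking at the post
    seconds_before_like: float = 0.0  # reading time before the like was clicked


@dataclass
class Post:
    post_id: str
    author: str


def interaction_weight(event):
    """Turn one interaction into a number; all weights are invented."""
    weight = min(event.dwell_seconds, MAX_SECONDS) / MAX_SECONDS
    if event.commented:
        weight += 2.0
    if event.liked:
        # A like after a long read counts for more than an instant like.
        weight += 1.0 + min(event.seconds_before_like, MAX_SECONDS) / MAX_SECONDS
    return weight


def build_affinity(history):
    """Sum the interaction weights per author to estimate the user's interest."""
    affinity = defaultdict(float)
    for event in history:
        affinity[event.author] += interaction_weight(event)
    return affinity


def select_feed(candidates, affinity, limit=300):
    """Rank candidate posts by the author's affinity score and keep the top `limit`."""
    ranked = sorted(candidates, key=lambda post: affinity.get(post.author, 0.0), reverse=True)
    return ranked[:limit]
```

In this toy version, a user who liked one friend's post after ten minutes of reading and another friend's post at a glance would see more of the first friend, and the posts that miss the cut are never shown at all.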

Facebook believes that this is in the best interest of users, and that these factors help them see what they will most likely want to engage with. However, this keeps us at the mercy of a gatekeeper who shapes the diversity of information we consume, more often than not without our explicit consent. Transparency is key.

(Vidushi Marda is a programme officer at the Centre for Internet and Society)
